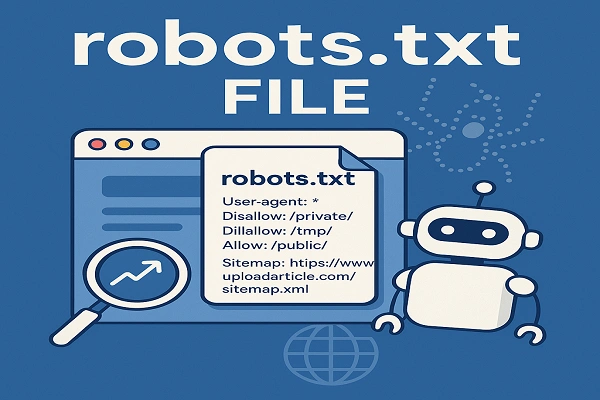

Robots.txt Files UploadArticle.com: A Quick SEO Guide

In the vast and dynamic world of the internet, search engine optimization (SEO) plays a pivotal role in determining the visibility and accessibility of your website. One of the fundamental tools in SEO is the robots.txt file. This simple yet powerful file guides search engine crawlers on how to interact with your site. In this article, we’ll delve into the intricacies of the robots.txt file, its significance, and how to effectively utilize it for your website, particularly focusing on platforms like UploadArticle.com.

What is a robots.txt File?

A robots.txt file is a plain text file placed at the root of your website’s domain. Its primary function is to tell web crawlers (also known as spiders or bots) which pages or sections of your site they should not crawl. It controls crawling rather than indexing, and it is useful for managing server load, keeping crawlers away from duplicate content, and steering them away from areas you would rather they not visit.

For instance, a basic robots.txt file might combine the following directives:

- User-agent: * applies the rules to all web crawlers.
- Disallow: /private/ and Disallow: /tmp/ prevent crawlers from accessing the specified directories.
- Allow: /public/ permits access to the public directory.
- Sitemap: provides the location of the site’s sitemap, aiding crawlers in discovering all pages.
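The directives described above can be put together and checked with a short Python sketch. It uses the standard-library urllib.robotparser module; the sitemap URL and the sample paths are assumptions made up for illustration, since the article does not give them, and site_maps() requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# Reconstructed from the directives listed above.
# The sitemap URL is a placeholder, not taken from the article.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Disallow: /tmp/
Allow: /public/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Hypothetical paths, used only to show how each rule is applied.
print(parser.can_fetch("*", "/private/report.html"))  # False
print(parser.can_fetch("*", "/tmp/cache.txt"))        # False
print(parser.can_fetch("*", "/public/index.html"))    # True
print(parser.can_fetch("*", "/about.html"))           # True (no rule matches)
print(parser.site_maps())  # ['https://www.example.com/sitemap.xml']
```

Paths that match no rule are allowed by default, which is why /about.html can be fetched.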

The Importance of robots.txt Files

1. Managing Crawl Budget

Search engines allocate a certain amount of resources to crawl each website, known as the crawl budget. By using a robots.txt file effectively, you can ensure that search engines focus their resources on your most important pages, thereby improving your site’s SEO performance.

2. Keeping Crawlers Away from Duplicate Content

Duplicate content can harm your site’s SEO rankings. The robots.txt file helps keep search engines from crawling duplicate URLs, such as print versions of pages or URLs that carry session IDs, which would otherwise waste crawl budget and dilute ranking signals.

3. Protecting Sensitive Information

While robots.txt isn’t a security measure, it can be used to ask search engines not to crawl sensitive areas of your site, like admin panels or private directories. However, it’s important to note that this doesn’t secure the content; it’s merely a request for crawlers to avoid these areas, and the file itself is publicly readable.

4. Enhancing Site Performance

By blocking crawlers from accessing unnecessary or resource-heavy pages, you can reduce server load and improve your site’s performance, leading to faster load times and better user experience.

How to Create and Upload a robots.txt File on UploadArticle.com

Creating and uploading a robots.txt file on platforms like UploadArticle.com is straightforward. Here’s a step-by-step guide:

Step 1: Create the robots.txt File

Using a text editor (like Notepad or TextEdit), create a new plain text file named robots.txt. Add the necessary directives based on your site’s structure and SEO strategy.
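If you would rather generate the file from a script than type it by hand, a minimal Python sketch might look like the following. The directives, the /admin/ path, and the sitemap URL are illustrative assumptions, not rules recommended for any particular site, and write_robots_txt is a hypothetical helper name.

```python
import tempfile
from pathlib import Path

# Illustrative directives only; replace them with rules that fit your site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /tmp/

Sitemap: https://www.example.com/sitemap.xml
"""


def write_robots_txt(directory: str) -> Path:
    """Write the directives to a file named exactly robots.txt."""
    path = Path(directory) / "robots.txt"
    path.write_text(ROBOTS_TXT, encoding="utf-8")
    return path


# Demonstration: write into a temporary directory and read the file back.
with tempfile.TemporaryDirectory() as tmp:
    written = write_robots_txt(tmp)
    saved_text = written.read_text(encoding="utf-8")

print(written.name)
```

The file name must be lowercase robots.txt, and the content should be saved as plain UTF-8 text rather than as a word-processor document.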

Step 2: Upload the File to Your Website

Once your robots.txt file is ready, you need to upload it to the root directory of your website. On UploadArticle.com, this typically involves:

- Logging into your account.
- Navigating to the file manager or FTP section.
- Uploading the robots.txt file to the root directory (e.g., https://www.uploadarticle.com/robots.txt).

Step 3: Verify the File’s Accessibility

After uploading, ensure that the file is accessible by visiting https://www.uploadarticle.com/robots.txt in your browser. If you see the contents of your robots.txt file, it’s correctly uploaded.
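The same check can be scripted. The sketch below derives the robots.txt address from any page URL on the site and then downloads it; robots_url and fetch_robots_txt are hypothetical helper names, and the page path in the example is made up. Only the URL helper runs here, because fetching requires network access.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.request import urlopen


def robots_url(page_url: str) -> str:
    """Return the robots.txt URL at the root of the page's host."""
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def fetch_robots_txt(page_url: str, timeout: float = 10.0) -> str:
    """Download robots.txt; urlopen raises HTTPError if it is missing."""
    with urlopen(robots_url(page_url), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


print(robots_url("https://www.uploadarticle.com/some/page?x=1"))
# https://www.uploadarticle.com/robots.txt
```

Calling fetch_robots_txt with one of your own page URLs should return the text you uploaded; an HTTP error suggests the file is not in the root directory.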

Step 4: Test the File

Use a tool such as the robots.txt report in Google Search Console, which replaced the older Robots.txt Tester, to check that your file is correctly formatted and that it blocks or allows the intended pages.
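You can also run a rough local test before relying on an online tool. The sketch below compares what you expect with what Python's urllib.robotparser decides; find_mismatches is a hypothetical helper, and the rules and paths are illustrative. Note that this parser uses simple prefix matching and does not interpret wildcards, so its verdicts may differ from a search engine's for more complex files.

```python
from urllib.robotparser import RobotFileParser


def find_mismatches(robots_text, expectations, user_agent="*"):
    """Return the paths whose crawlability differs from what was expected."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return [
        path
        for path, expected in expectations.items()
        if parser.can_fetch(user_agent, path) != expected
    ]


# Illustrative rules and expectations (True means crawling is allowed).
rules = "User-agent: *\nDisallow: /admin/\n"
expected = {"/admin/login": False, "/blog/post": True}

print(find_mismatches(rules, expected))  # []
```

An empty list means every path behaved as expected; any path that is returned points to a rule worth rechecking.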

Best Practices for Crafting an Effective robots.txt File

To maximize the benefits of your robots.txt file, consider the following best practices:

1. Be Specific with Directives

Instead of broadly disallowing entire directories, specify individual pages or sections that you want to block. This ensures that important content isn’t unintentionally excluded.

2. Use the Allow Directive Wisely

If you disallow a directory but want to allow access to a specific file within it, use the Allow directive.
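A small sketch of this pattern, with a made-up file name, is shown below. The Allow line is placed before the Disallow line on purpose: Python's urllib.robotparser applies the first rule that matches, whereas Google picks the most specific matching rule regardless of order, so listing Allow first gives the same result under both approaches.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical paths: one file is opened up inside a blocked directory.
ROBOTS_TXT = """\
User-agent: *
Allow: /private/press-kit.html
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

print(parser.can_fetch("*", "/private/press-kit.html"))  # True
print(parser.can_fetch("*", "/private/notes.html"))      # False
```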

3. Regularly Update the File

As your website evolves, so should your robots.txt file. Regularly review and update it to reflect changes in your site’s structure and SEO strategy.

4. Avoid Blocking CSS and JavaScript Files

Blocking CSS and JavaScript files can hinder search engines from rendering your pages correctly, potentially affecting your rankings. Only block these files if absolutely necessary.

5. Monitor Crawl Errors

Regularly check Google Search Console for crawl errors related to your robots.txt file. Address any issues promptly to ensure optimal crawling and indexing.

Common Mistakes to Avoid

While working with robots.txt files, be mindful of these common pitfalls:

- Blocking Important Content: Accidentally disallowing access to crucial pages can harm your site’s SEO performance.
- Incorrect Syntax: Ensure that your directives are correctly formatted to avoid misinterpretation by crawlers.
- Overusing Wildcards: While wildcards can be powerful, overusing them can lead to unintended consequences.
- Ignoring Crawl Errors: Failing to address crawl errors can impede search engines from indexing your site effectively.

Conclusion

The robots.txt file is a vital component in managing how search engines interact with your website. By understanding its functions and best practices, you can enhance your site’s SEO performance, keep crawlers away from areas they don’t need to visit, and ensure efficient use of your crawl budget. For platforms like UploadArticle.com, leveraging the robots.txt file effectively can lead to better visibility and user experience. Always remember to regularly review and update your robots.txt file to align with your evolving SEO strategies and website structure.

FAQs About Robots.txt Files UploadArticle.com

1. Can robots.txt prevent my site from being indexed?

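No, robots.txt only controls crawling. To prevent indexing, use a noindex rule, either in a robots meta tag in your HTML or in an X-Robots-Tag HTTP header. The page must remain crawlable for this to work, because a crawler that is blocked by robots.txt never sees the noindex rule.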

2. Is robots.txt a security measure?

No, robots.txt is a public file accessible to anyone. It merely requests crawlers to avoid certain areas; it doesn’t secure content.

3. Can I block specific search engines using robots.txt?

Yes, by specifying the User-agent directive, you can target specific crawlers.
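As a rough illustration, the sketch below blocks one crawler from the whole site while leaving every other crawler unrestricted, then checks the result with Python's urllib.robotparser. Bingbot is chosen only as an example of a user-agent name, and the page path is made up.

```python
from urllib.robotparser import RobotFileParser

# One group for the crawler being blocked, one default group for the rest.
# An empty Disallow value means nothing is disallowed.
ROBOTS_TXT = """\
User-agent: Bingbot
Disallow: /

User-agent: *
Disallow:
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

print(parser.can_fetch("Bingbot", "/articles/seo-guide"))    # False
print(parser.can_fetch("Googlebot", "/articles/seo-guide"))  # True
```

Keep in mind that this only affects crawlers that choose to honor robots.txt.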